Google has a utility to convert glTF files (a 3D transmission format for the web) into the USDZ format (a 3D format released by Apple that is based on the USD format from Pixar). Unfortunately, if you want to use this utility, you need to build it from source. This one is going to get really technical, but putting all of these pieces together took me quite a bit of time, so I figured these directions would be useful to others! If you want to build the usd_from_gltf project on Windows using WSL with Debian, read on!

Why?

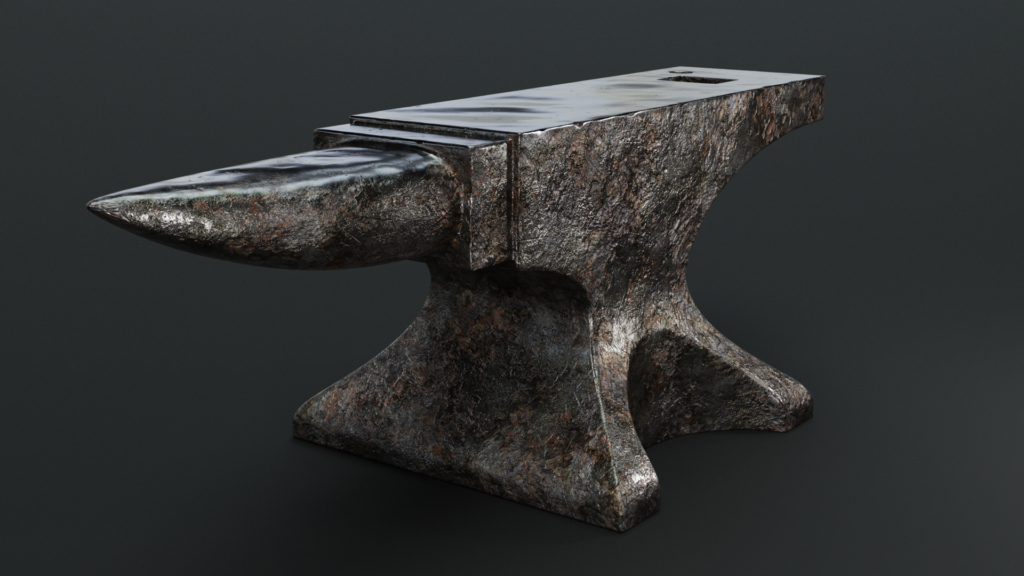

I recently learned that Apple platforms (Mac, iPhone, etc.) can send and receive 3D objects and use them directly in Augmented Reality (AR) mode. If you have an Apple device, you can see what I mean by visiting the Apple Augmented Reality Quick-Look page; try clicking on one of the 3D models and allowing your browser to access your camera. The 3D model (and any associated animations) can be placed in-camera into the environment that you are viewing!

But of course, there is a catch: the file needs to be in the USDZ format. What is USDZ? It’s a format Apple introduced (working with Pixar) that is based on another format, USD, which Pixar has made available to the 3D community. USD is gaining popularity as an interchange format for 3D pipelines because it supports non-destructive editing, different views, layered overrides (which USD calls “opinions”), and even allows different parts of the pipeline (modeling, lighting, animation) to be worked on independently.

But USDZ isn’t a drop-in replacement for USD. It uses only a subset of USD features and packs the files into a different container: an uncompressed ZIP archive with custom byte alignment (each file’s data has to start on a 64-byte boundary), so a ZIP made with ordinary tools generally isn’t a valid USDZ, either. Of course.
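
To make the byte-alignment point concrete, here is a rough Python sketch that inspects an archive and reports whether each entry would pass the layout rules. It assumes what the USDZ specification describes: entries are stored uncompressed, and each file's data starts at a multiple of 64 bytes from the start of the archive. The function names (`data_offset`, `check_usdz_layout`) are mine and not part of any USD tooling, and this only checks layout, not whether the contents are valid USD.

```python
import io
import struct
import zipfile

# Assumption: the 64-byte alignment described in the USDZ specification.
USDZ_ALIGNMENT = 64


def data_offset(raw, info):
    """Return the byte offset where an entry's file data begins."""
    # A ZIP local file header has 30 fixed bytes; the file-name length and
    # extra-field length are two little-endian uint16 values at offset 26.
    name_len, extra_len = struct.unpack_from("<HH", raw, info.header_offset + 26)
    return info.header_offset + 30 + name_len + extra_len


def check_usdz_layout(raw):
    """For each entry, return (name, stored_uncompressed, aligned_to_64)."""
    report = []
    with zipfile.ZipFile(io.BytesIO(raw)) as archive:
        for info in archive.infolist():
            stored = info.compress_type == zipfile.ZIP_STORED
            aligned = data_offset(raw, info) % USDZ_ALIGNMENT == 0
            report.append((info.filename, stored, aligned))
    return report
```

Running `check_usdz_layout(open("model.usdz", "rb").read())` on a file (the file name here is just a placeholder) gives one tuple per entry. A ZIP written by an ordinary archiver will usually report `False` for alignment, because nothing pads the headers so the data lands on a 64-byte boundary.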

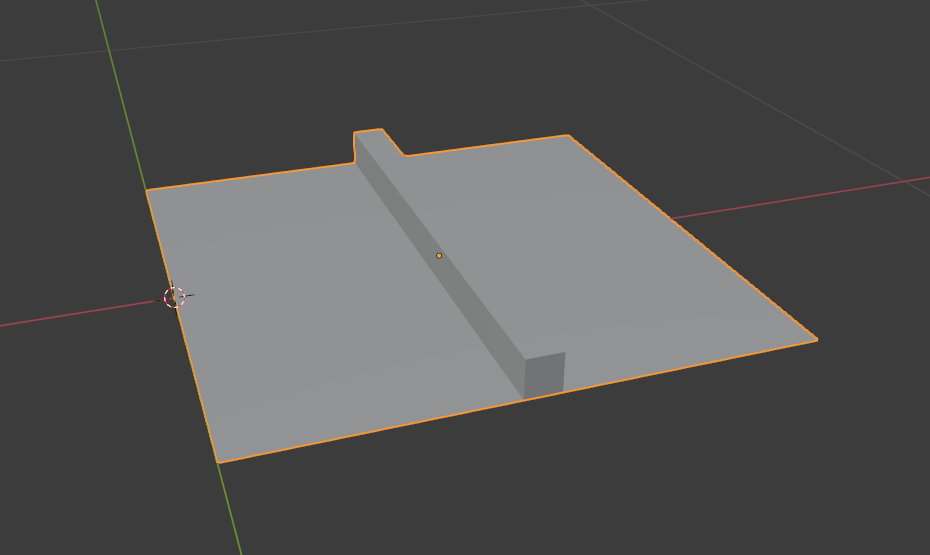

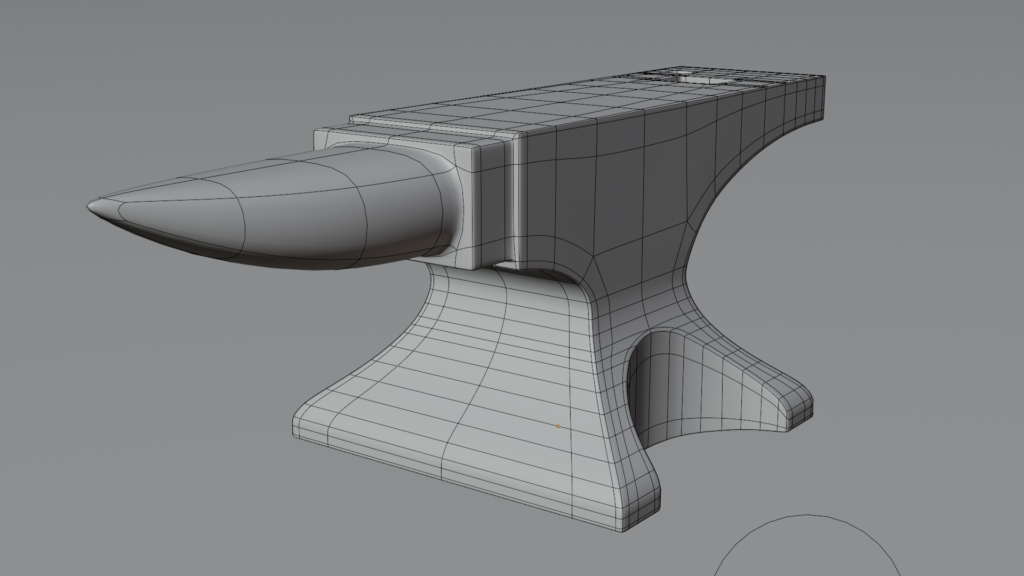

And I primarily use Blender for 3D objects, which only supports USDA and USDC (the formats defined by Pixar). So if I want to export an object to USDZ from Blender, I am out of luck – at least, if I want to directly export the files.

Fortunately, Blender also supports exporting to glTF, which is a format designed for 3D models on the web, especially on mobile devices. The goal of glTF is to be the “JPG of 3D”, so the format is designed to be easily consumed by browsers with minimal processing.

And Google released an unofficial utility to convert from glTF to USDZ. So I decided to build it, figuring that it couldn’t be too hard to compile a simple conversion utility. After hours of trying different compile steps, I finally got lucky and hit the magic combination. Here are the steps!
